Although apartheid in South Africa ended in the early 1990s, its shadow still lingers. Colourism is generally understood as a form of discrimination and prejudice, typically occurring within a particular racial or ethnic group, that favours people with lighter skin over those with darker skin.

This harmful form of prejudice is often subsumed within debates and analyses of racism; however, it affects a wide swath of people across many populations.

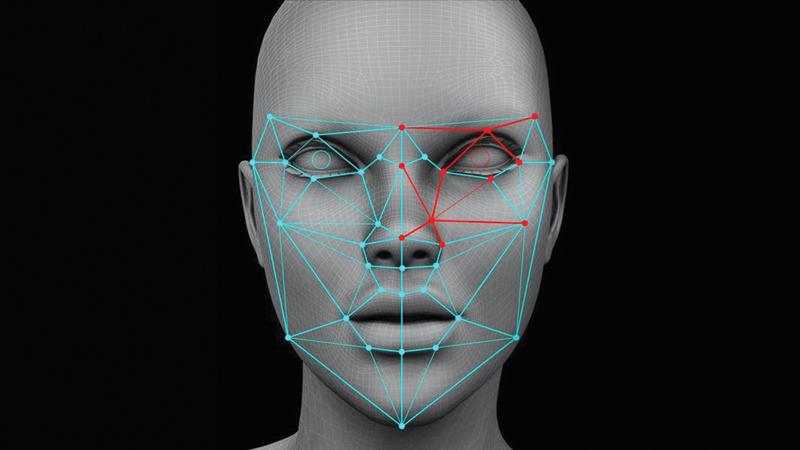

Meanwhile, a considerable number of researchers around the world have taken a keen interest in studying facial recognition technology, and in particular in evaluating the racial bias of its algorithms.

Facial recognition technology is no longer a novelty, and it has advanced by leaps and bounds. Some commercial software can now even classify the gender of the individual in a portrait. However, this precision is not universal, even though facial recognition algorithms often boast classification accuracies of over 90%.

Fresh ground

Research indicates that facial recognition technology has a colour bias: the software is reported to be accurate 99% of the time when the person in the portrait is a white man.

Dr. Krishantha Kapugama, a scholar whose research focuses predominantly on biometrics, and who has broken fresh ground by examining how the technology performs on people of different ethnicities and genders, said that the darker the skin, the more errors arise, with error rates of approximately 35 percent for photos of women with darker skin.

Dr. Kapugama described unequal error rates across demographic groups, with the lowest accuracy consistently observed for women with darker skin tones aged 18 to 30.

The 2018 “Gender Shades” project was a landmark study. It evaluated the accuracy of Artificial Intelligence (AI)-powered gender classification products.

The significance of this project lies in its intersectional approach, which it used to assess three commercial gender classification algorithms, including those developed by IBM and Microsoft. The subjects were grouped into four categories: women with darker skin tones, men with darker skin tones, women with lighter skin tones and men with lighter skin tones. Every algorithm examined performed worst on women with darker skin tones, with error rates up to 34 percentage points higher than those for men with lighter skin tones.
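The intersectional audit described above comes down to computing an error rate separately for each skin-tone and gender subgroup rather than reporting one overall figure. The Python sketch here illustrates that idea under stated assumptions: the records, the two-category skin labels and the function name `subgroup_error_rates` are hypothetical and invented for illustration; they are not the Gender Shades code or data.

```python
from collections import defaultdict

# Hypothetical toy records: (skin_tone, true_gender, predicted_gender).
# These values are invented for illustration and are NOT results from Gender Shades.
RECORDS = [
    ("darker", "female", "male"),
    ("darker", "female", "female"),
    ("darker", "male", "male"),
    ("darker", "male", "male"),
    ("lighter", "female", "female"),
    ("lighter", "female", "female"),
    ("lighter", "male", "male"),
    ("lighter", "male", "male"),
]


def subgroup_error_rates(records):
    """Return the misclassification rate for each (skin_tone, gender) subgroup."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for skin, truth, predicted in records:
        group = (skin, truth)  # intersectional subgroup, e.g. ("darker", "female")
        totals[group] += 1
        if predicted != truth:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


rates = subgroup_error_rates(RECORDS)
worst = max(rates, key=rates.get)  # subgroup with the highest error rate
best = min(rates, key=rates.get)   # subgroup with the lowest error rate
gap_points = 100 * (rates[worst] - rates[best])  # gap in percentage points

for group, rate in sorted(rates.items()):
    print(group, f"{rate:.0%}")
print("worst:", worst, "gap:", f"{gap_points:.0f} percentage points")
```

In this toy example the overall accuracy is 7 of 8 (about 88%), yet one subgroup is misclassified half the time, which is the kind of disparity a single aggregate figure conceals.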

An independent assessment of 189 face recognition algorithms by the National Institute of Standards and Technology (NIST) of the United States has reportedly confirmed these findings, showing that the algorithms are least accurate on women of colour. Researchers have also noted that when a dataset contains far fewer images of women with darker skin tones than of men with lighter skin tones, identifying the former becomes much harder. Citing another study, Dr. Kapugama noted that one widely used facial recognition dataset was estimated to be more than 75% male and more than 80% white.

As Dr. Kapugama noted, these studies raise wider questions of accountability and fairness in artificial intelligence at a time when its adoption and influence are widely felt. Today, organisations deploy facial recognition technology in many different ways, including to help target product pitches based on social media profile pictures.

Researchers at Georgetown Law School said that around 117 million American adults are in face recognition networks used by law enforcement authorities, and that African Americans were disproportionately singled out because they are overrepresented in mug-shot databases.

Anecdotal evidence

Researchers suggest that facial recognition technology should be regulated to mitigate the harm caused by misidentification and to make it socially accountable. There is anecdotal evidence of computer vision errors that have led to discrimination. Google’s apology after its photo app labelled African Americans as ‘gorillas’ is a classic example of the mistakes facial recognition technology can make. Scholars suspect that facial recognition software performs differently on different populations.

Citing findings from the Massachusetts Institute of Technology in Cambridge, Dr. Kapugama said that face recognition software packages performed worse at classifying the gender of women and people of colour than at classifying men with lighter skin tones.

This has raised concern among experts in the field about demographic bias, and there has since been a growing demand for either a moratorium on or a ban of facial recognition software.

Dr. Kapugama said that users across the world are affected by digital discrimination, which exposes them to unfair and inequitable treatment by machine learning and algorithmic systems that gather personal data. One may be surprised to hear that racism and colourism still prevail in some societies even as the world undergoes rapid technological change.

The bitter truth is that in some societies, racism and colourism remain as menacing as terrorism. According to researchers, machine decision-making exhibits racial discrimination, which lies at the heart of the argument that racism pervades facial recognition technology and its implementation. The central issue lies in the constraints and limitations of algorithmic systems, whose biases take on different characteristics and give rise to different forms of digital discrimination.

According to recent publications, facial recognition is inherently racist, since in some cases its accuracy in identifying people with darker skin tones is doubtful. In conclusion, Dr. Kapugama cited an older but widely quoted statistic that women with darker skin tones are five times more likely than men with lighter skin tones to be misidentified by facial recognition algorithms.